Language models’ training data are the source of all their knowledge. Massive collections of unstructured text are fed to the model over the course of training (a months-long process). The data shape the parameters of the model, which encode all it knows. Once the data are in the model, it is generally not possible to examine them (they are not stored in any database). The model is completely fixed unless the expensive training process resumes.

The quality of a model depends on the quality of the data: garbage in, garbage out. OpenAI is getting sued regularly over what it puts in its training mix. So what actually is being fed to our models?

Before taking a closer look at all the popular datasets, there are some big takeaways:

The vast majority of data (90%+) come from internet scraping.

There is very little non-English data fed to most (American-made) models, often with zero Chinese.

High-quality data are scarce, and many of the best sources are copyrighted (litigants have a strong case against AI companies).

Even the “high-quality” fine-tuning data are not particularly high-quality.

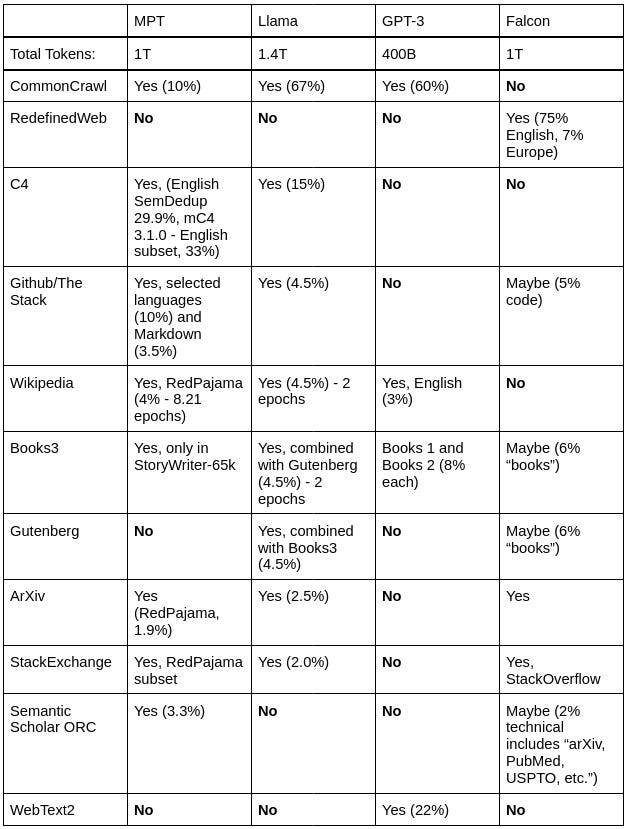

The table gives an at-a-glance view of some of the datasets used for popular models. The information is taken from the papers and blog posts that accompanied those models’ releases.

MPT, Llama, and Falcon are three of the most popular open-source models. Their quality appears to be somewhere between GPT-3 and ChatGPT (GPT-3.5), but additional fine-tuning and reinforcement learning might increase their performance to near GPT-4 levels. MPT is the offering of MosaicML (recently purchased by Databricks); Llama is Meta’s project; and Falcon is from a relatively unknown research institute in Abu Dhabi, the Technology Innovation Institute (TII). Falcon performs surprisingly well on HuggingFace’s Open LLM Leaderboard. Each of those models comes in different sizes, the smallest of which are trained on the fewest tokens (tokens can be thought of as words).

As for GPT-4, OpenAI has intentionally kept private the data the model was trained on. In the GPT-4 technical report, OpenAI says that it was trained on both public and privately licensed data. We can assume that the data mix is comparable to the open models, but there are obviously differences: for one, GPT-4 is capable of reading and writing Chinese.

One last thing to keep in mind: the goal of a language model is fundamentally to predict text. In reading the examples below, consider how feasible it is to predict what comes next based on the previous text. Could you do it? What would you have to know about the world to guess the next word successfully? Those questions are at the heart of the new AI.
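To make "predicting text" concrete, here is a toy sketch in Python. It is not how a real language model works internally: it only counts which word most often follows each word in a tiny made-up corpus. The function names `train_bigrams` and `predict_next` and the corpus are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the text."""
    tokens = text.lower().split()
    following = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        following[prev][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Made-up training text, for illustration only.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)

if __name__ == "__main__":
    print(predict_next(model, "the"))
    print(predict_next(model, "unicorn"))
```

A counter like this knows nothing about the world; it only repeats what it has seen. Guessing the next word in the examples that follow takes far more than that.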

Web Scraping

From the table, you can see that completely random web content makes up a perhaps surprisingly large proportion of the data. The most popular web-scraping dataset is from Common Crawl, a non-profit (C4 and mC4 are derivatives). The data span 40+ languages, but the vast majority of them are filtered out before training begins (ostensibly so as not to confuse the model). For 7 years, Common Crawl has collected data monthly or bi-monthly by randomly sampling a subset of the internet. The most recent crawl is about 390 terabytes.

Common Crawl honors websites’ rules against scraping: if a website disallows “CCBot” in its robots.txt file, the bot will not crawl the site. Of course, less scrupulous companies can simply ignore the file.
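As a rough illustration of how a well-behaved crawler consults robots.txt, the sketch below uses Python's standard `urllib.robotparser` module. The robots.txt contents and URLs are hypothetical, and this is not Common Crawl's actual code; it only shows the kind of check involved.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site (not taken from any real website).
ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if `user_agent` may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/article"
    print("CCBot allowed:", is_allowed(ROBOTS_TXT, "CCBot", url))
    print("Other bot allowed:", is_allowed(ROBOTS_TXT, "SomeOtherBot", url))
```

Under these rules, CCBot is refused everywhere while other bots are kept out of `/private/` only. Nothing enforces the answer: a crawler that never performs the check is not slowed down at all.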

To get a feel for the data, below are two examples.

Common Crawl Example 1:

"Konstantinos Papachristou The Darkness of a Fairy Tale “We are living in a world where we are constantly bombarded by advertisements…” “We have lost the true meaning of life…” The Darkness of a Fairy Tale is the debut album of saxophone player and composer Konstantinos Papachristou, and describes the journey of a man that receives his wake-up call and begins an esoteric quest in an effort to find the true meaning of life. Konstantinos composed a contemporary jazz concept-album, with an often dark, programmatic intention, that allures the listener to dive deep into his senses. The arrangement retains an unrefined classic jazz sound, and the instrumentation includes a double bass, piano and drums that blend perfectly with the composer’s fat sounding, tenor saxophone and strong influence of the ECM Jazz label. Been a very creative person, Konstantinos loves music as well as High-End Audio, and he has been involved in various music projects, and has also designed High-End Audio Equipment for consumer and professional use. He supervised the whole production process of the album, and imprinted his personal standpoint in the sound of the album. Darkness of a Fairy Tale retains a live feel with the calmness and focus of a studio album, making it the best of both worlds. Darkness of a Fairy Tale is released in an aesthetic digipak audio-CD featuring cover art from the stunning original painting “Jours et Nuits No4” (acryl on canvas, 1996, Vangelis Papachristos) and also on every popular digital music store world wide. You can like Konstantinos’ facebook page here: https://www.facebook.com/KonstPapachristou/ Τελευταίες κυκλοφορίες Kaisarias str 101, 18450 Nikaia, Greece"

Common Crawl Example 2:

"एसबीएस हिंदी से जुड़े रहिएं Meanings of lesbian in hindi Lesbian themes in Hindi cinema Nazshura 13.08.2018 1 Hindi lesbian video Mezikus 13.08.2018 1 Comments There was a big production house involved and we were launching leads. Their relationship remained ambiguous in the end, but it sparked conversations on lesbian relationships. But there is hope for the future with the younger generation being open-minded in this regard. They have what is most important in a film - a story. He recounts that back in , there was hardly any original content from India. Starring Sunny Leone and Sandhya Mridul, there is a passionate kiss between the two female protagonists of the film. The homosexuality is subtle but obvious and makes the film a pure delight to watch, especially when viewers are under the false impression that the love story is between Arshad-Huma and Madhuri and Naseeruddin. This is a refreshing change from the usual stereotypes seen on the silver screen in India. Both Isha and Amrita received flak for doing such a film as that time, Bollywood and viewers were still opening up to the theme of sexuality, something that was not exploited too much on the silver screen. Siras craves companionship and a human connection, which never comes. However, even with so many movies being produced, there are only a handful that deal with LGBT themes or have significant queer characters in them. Fire is a beautiful story of two women who are married to two brothers who neglect them and end up having a relationship with each other, thus exploring their sexuality. But it is also about ageing, alienation and loneliness so acute that it renders you hollow."

There are two main things to notice here: first, the data are completely unformatted. All of those ordering cues and side panels on a website show up in the data inline. Note the two truncated quotes at the start of example 1. It is pretty messy. Moreover, there is no filter for explicit themes: the second example discusses lesbianism in Hindi cinema. It is hard to predict what the model will learn.

Books

The “books” datasets contain highly coveted long-form text. If you would like a model to be able to maintain a train of thought over long sequences, you need text with long-term dependencies. That text almost uniquely comes in the form of books: non-fiction and fiction alike. Ideally, we would train on every book in existence (and the reality is not far off; there just aren’t a lot of them). The widely used books datasets are referred to as “books1”, “books2”, and “books3”.

Unfortunately, what exactly is in books1 and books2 is fairly mysterious. Some online speculation leans toward books2 being libgen. The contents of books3 are better known: it is all of bibliotik, a trove of pirated books (hence the cryptic pseudonym “books3”). Companies should be afraid to release models trained on books3: MosaicML has a separate series of models, StoryWriter, trained on books3 and licensed separately (probably still illegally, however). As for OpenAI, they have already been sued. The question is whether releasing a model trained on some data is akin to releasing the data itself.

On the other hand, Gutenberg is a repository of public-domain books. There is no worry about copyright infringement.

Multi-Lingual Data

While the internet has a lot of non-English data, most open models choose to focus on English. The data fed to Llama contains only languages having the Latin or Cyrillic alphabets. If you ask a question to Llama in Chinese, it will sputter (not so for ChatGPT, however). You can see in the table above and in the section on data-processing below which datasets were distilled into English for which models.

It is not surprising, then, when Chinese labs claim superiority to American models on Chinese-language tasks. In the linked table, ChatGLM-2 is a tiny (6B) model that supposedly outperforms GPT-4. At the bottom of the list is Llama. What is surprising is not that Llama performs so poorly, but that it can make any sense in Chinese at all, having been trained on only the small amount of Chinese text that made it through a filter. GPT-4, on the other hand, was probably trained on a lot more Chinese, so its ranking only second is somewhat surprising. It may indicate that however much Chinese text GPT-4 was trained on, the amount was still limited.

Other Pre-Training Data

Rounding out the data mixes are typically scholarly or general-knowledge texts, such as Wikipedia. These are high-quality datasets with important information. Because there is no guarantee the model will learn facts present in the data upon just one viewing, these datasets are often passed through more than once during training. Llama samples everything from Wikipedia twice on average.
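How many times a dataset is seen falls out of simple arithmetic: the share of training tokens drawn from the dataset, times the total number of training tokens, divided by the dataset's size. The sketch below shows the calculation. The numbers are hypothetical round figures chosen for illustration, not values reported for Llama or any other model.

```python
def effective_epochs(sampling_fraction: float,
                     total_training_tokens: float,
                     dataset_tokens: float) -> float:
    """Average number of times each token of a dataset is seen during training."""
    return sampling_fraction * total_training_tokens / dataset_tokens


# Hypothetical figures: a 1-trillion-token training run that draws 4% of its
# tokens from a 20-billion-token encyclopedia dataset.
epochs = effective_epochs(
    sampling_fraction=0.04,
    total_training_tokens=1e12,
    dataset_tokens=2e10,
)

if __name__ == "__main__":
    print(f"Each token is seen about {epochs:.1f} times on average")
```

The same formula explains why a small, high-quality dataset can be repeated several times while a huge web scrape is never seen in full.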

ArXiv (pronounced “archive”) is a repository of academic papers from STEM fields. In Computer Science, it is popular to release a paper on ArXiv first as a pre-print. It is not surprising that the computer scientists who train models make sure that their own papers are included in the data.

Semantic Scholar is a more interdisciplinary platform, included in a few models.

Falcon goes above and beyond with regard to academic data: TII explicitly mentions training on PubMed and other repositories.

Many models are trained on code as well as text. This code mostly comes from GitHub, an online repository of open source software.

Lastly, companies typically add Stack Exchange data to the mix as well. Stack Exchange is a network of question-and-answer sites; Stack Overflow is its best-known member, devoted to programming. This data is considered high-quality, especially for models designed to become chat bots. In the pre-processing step, Llama sorts answers by score (highest to lowest). See an example below from the Stack Exchange dataset available through Red Pajama.

"Q: Is it safe to always leave a Macbook Pro on? I have an external monitor, keyboard, and mouse connected to my Macbook Pro. I have the lid closed and have it running. I run it as if it were a desktop PC, always on. I only shut off the LCD attached to it. Is it safe to always have the Macbook Pro on and running? About every other week I let the battery drain almost completely. I also have a Belkin laptop cooling stand running underneath it. Is there anything else I can do to safely have my MBP running most of the time? Update: Just wanted to add that I have the newer 2010 Macbook Pro. A: Sure The current uptime on my MacBook is 21 days. One thing to note: * *I wouldn't drain the battery like that. Lithium ion batteries don't work like NiCa batteries with memory. If you have it continuously plugged in, charge to 60% and then remove the battery. A: Technically, yes, but from a long-term maintenance standpoint, I would reccommend at least rebooting once a week. I reboot all my Macs first thing Monday morning, after the Sunday night system maintenance runs, and I never have problems with crashes, memory and the like (unless, of course I do something stupid while programming). Even Mac OS X needs a quick refresh every now and then. A laptop is really not that much different from a desktop computer; it's still a hard drive, ram, cpu, keyboard, and mouse just in a compact form factor. Anything you would do with a desktop machine, do with your laptop. A: From a software standpoint, it's perfectly fine to leave your Mac on continuously. It can even be considered recommended, since Mac OS X has a number of optimization scripts that run on a daily, weekly, monthly basis, but can only do so if the computer is on. From a hardware standpoint, it depends on your model. If you have an older (pre-2008) MacBook with a removable battery, leaving it on and plugged in continuously will kill the battery quick. In that case, you should remove the battery from the machine, only putting it back in occasionally, charging it to around 60%, to keep it in good working order. The new MacBooks with non-removable batteries are designed to be able to be left plugged in constantly. The battery has circuitry to optimize the charge cycles so that the life of the battery is not adversely degraded over time (more than just normal use causes)."

Data Processing

The most popular method for data processing is called the CCNet pipeline. It involves first de-duplicating the text and then applying a small language model to compare the given text to known high-quality data. If they seem similar according to the model, then the data are used. This pipeline is used in the RedPajama datasets, which were in turn used by MPT. The CCNet pipeline or something like it is generally applied to every new language model.
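The two steps described above, de-duplication followed by a model-based quality check, can be sketched in a few lines of Python. This is a simplified stand-in and not the CCNet code: it removes exact duplicates by hashing normalized paragraphs, and it scores text with a tiny add-one-smoothed unigram model in place of a real language model. The class and function names, the reference text, and the perplexity threshold are all assumptions made for illustration.

```python
import hashlib
import math
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical copies hash the same."""
    return " ".join(text.lower().split())


def deduplicate(paragraphs):
    """Keep the first occurrence of each paragraph, comparing by normalized hash."""
    seen = set()
    unique = []
    for paragraph in paragraphs:
        digest = hashlib.sha1(normalize(paragraph).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(paragraph)
    return unique


class UnigramModel:
    """Toy stand-in for a small language model trained on known high-quality text."""

    def __init__(self, reference_text: str):
        tokens = normalize(reference_text).split()
        self.counts = Counter(tokens)
        self.total = len(tokens)
        self.vocab_size = len(self.counts) + 1  # one extra slot for unseen words

    def perplexity(self, text: str) -> float:
        """Lower perplexity means the text looks more like the reference data."""
        tokens = normalize(text).split()
        if not tokens:
            return float("inf")
        log_prob = 0.0
        for token in tokens:
            # Add-one smoothing gives unseen words a small nonzero probability.
            prob = (self.counts[token] + 1) / (self.total + self.vocab_size)
            log_prob += math.log(prob)
        return math.exp(-log_prob / len(tokens))


def filter_by_perplexity(paragraphs, model, max_perplexity):
    """Keep only paragraphs the reference model finds sufficiently familiar."""
    return [p for p in paragraphs if model.perplexity(p) <= max_perplexity]


if __name__ == "__main__":
    # Made-up reference text and scraped paragraphs, for illustration only.
    reference = "the cat sat on the mat the dog sat on the rug"
    scraped = ["The cat sat", "the  cat sat", "zzz qqq xxx"]
    model = UnigramModel(reference)
    kept = filter_by_perplexity(deduplicate(scraped), model, max_perplexity=10.0)
    print(kept)
```

A real pipeline would work at web scale and use a far stronger model, but the shape is the same: drop repeats first, then keep only what resembles text already trusted.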

There are also a variety of heuristics used to prune the non-web-scraping datasets. For example, here are the criteria used by MPT to filter ArXiv papers:

Is open access

Has title and abstract

Paper is in English

>=500 words and >=5 paragraphs

Paper was published after 1970 and before 2022-12-01

Most frequent word in the paper consists of alphabetic characters only, and it appears in less than 7.5% of the document.
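The criteria above translate almost directly into code. The sketch below is one possible reading of them, not MPT's actual implementation. The `Paper` record and its field names are hypothetical, the language is assumed to have been detected beforehand, paragraphs are assumed to be separated by blank lines, and "after 1970" is read as any date from 1 January 1970 onward.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class Paper:
    """Hypothetical record for one paper; the field names are assumptions."""
    title: str
    abstract: str
    body: str
    language: str          # assumed to be detected earlier, e.g. "en"
    is_open_access: bool
    published: date


def passes_filters(paper: Paper) -> bool:
    """Apply the listed heuristics in order; any failure rejects the paper."""
    if not paper.is_open_access:
        return False
    if not paper.title.strip() or not paper.abstract.strip():
        return False
    if paper.language != "en":
        return False

    words = paper.body.split()
    # Assumption: paragraphs are separated by blank lines.
    paragraphs = [p for p in paper.body.split("\n\n") if p.strip()]
    if len(words) < 500 or len(paragraphs) < 5:
        return False

    # Assumption: "after 1970" means on or after 1970-01-01.
    if not (date(1970, 1, 1) <= paper.published < date(2022, 12, 1)):
        return False

    # The most frequent word must be purely alphabetic and make up
    # less than 7.5% of all words in the document.
    top_word, top_count = Counter(w.lower() for w in words).most_common(1)[0]
    if not top_word.isalpha():
        return False
    return top_count / len(words) < 0.075
```

Each rule is cheap to evaluate, which matters when the same check runs over millions of documents. The last rule is the most interesting: a document whose commonest "word" is a symbol or a number is probably a table, a formula dump, or a parsing failure.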

There is a lot of junk on the internet. Sometimes even the most basic rules make a difference.

Fine-Tuning Data

Fine-tuning data are what turn a regular language model into a chat bot. They are applied after the pre-training step, which involves all the data above. The data include question/answer pairs, usually hand-written by people in the Philippines or other developing countries. In the case of the Dolly-RLHF dataset from Databricks, the data were collected from “thousands of Databricks employees [and consists of] several behavioral categories.” Another popular dataset is the Anthropic “helpful and harmless” dataset (used in MPT’s chat variant). Some of its examples include annotations regarding the answers’ helpfulness and harmlessness.

These datasets are typically very small. The main Dolly RLHF dataset is 15k examples: enough to fit on a floppy disk (compressed, probably).

Since people are being paid to generate the data, you might expect high-quality text. However, it is not clear if the story is as good as it sounds. Here are some typical examples from the Dolly RLHF dataset:

Q: "Why are In-N-Out cheeseburgers so good?"

A: "In-N-Out cheeseburgers are seen as good for a few reasons. The meat is never frozen and tight quality controls at their processing plants insure a consistent and high quality meat patty. The incentive structure for the In-n-out employee results in workers staying at the fast food chain longer with the manager frequently in charge of employees and the actual cooking of the burger patties. This method is opposite of competing fast food restaurants who assign the burger cooking to entry level employees and customer management to higher levels. In-n-out maintains a smaller menu focusing on a few key items instead of an expansive or seasonally themed menu making it easier to maintain high standards of quality. Lastly their slow growth and enthusiastic fans provide a positive atmosphere lending itself to people thinking more highly of In-n-out where without this influence might be neutral on the brand."

Q: "What should be your day to day creator device ?"

A: "1. Iphone pro max 2. DJI OSMO pocket 2 DJI is such an awesome device to shoot as it has it's gimble and easy to operate. The video quality is actually nice"

Both contain time-period specific brands (the first reading more like an ad for In-N-Out than anything, and who knows if it’s true). We would rather not throw away our data once a new iPhone comes out, yet the second example depends on the relevancy of the “Iphone pro max 2”. Moreover, I did not realize that a “day to day creator device” was a common product (it is slightly upsetting that such a question even makes sense). Anyway, now I know that the DJI OSMO pocket 2 DJI is “gimble [sic] and easy to operate.”

When handcrafting the data that will become our overlords’ personality, you might expect a team of great experts carefully tinkering on a robotic mind. Instead, some Databricks employee was just trying to meet a quota.

Conclusion

There is plenty of room at the bottom. Data quality has a clear impact on model quality. Still, what we put into our models can range from genius (ArXiv papers) to dumb (Dolly-RLHF) to simply malformed (web scrapes). Moreover, the rights to the highest quality data are still completely up in the air. Companies should focus on what is in the data, not just how much.

Gordon Kamer is the founder of U.S. Artificial Intelligence Inc. For comments or inquiries about the company, email gordon@us.ai.